Verified Transcripts

Credential Clusters & Paths

Google Data Engineer Learning Path

Dataflow | BigQuery | Dataplex | Gemini AI

AWS Data Analytics Learning Plan

Kinesis | Athena | QuickSight | IoT Analytics

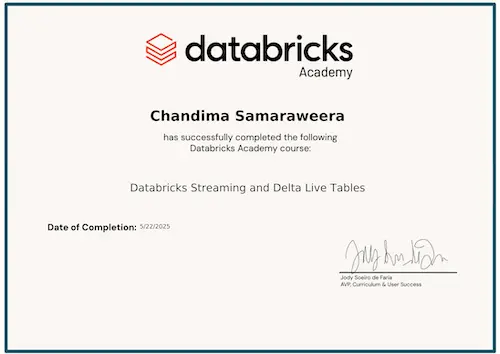

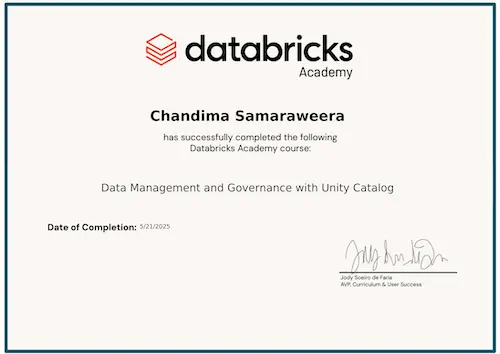

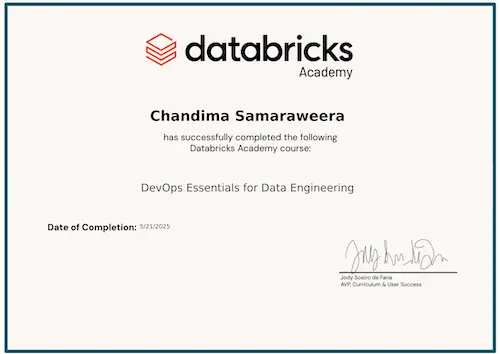

Databricks Data Engineering Learning Path

Delta Lake | Workflows | Unity Catalog

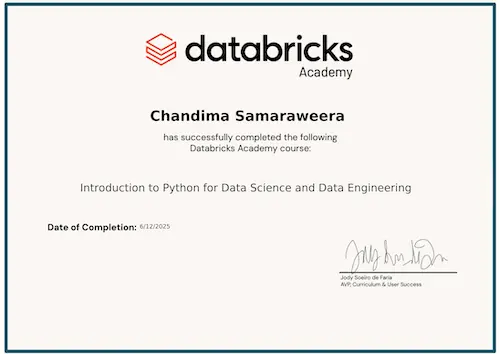

Databricks Apache Spark Learning Path

Spark Core | Streaming | Optimization

Core Engineering Foundations